Some researchers say anonymous data is a lie, and that unless all aspects of de-identifying data are done right, it is incredibly easy to re-identify the subjects.

Are you concerned by that statement? We are—because re-identification of data presents a significant privacy risk. It potentially exposes highly sensitive personal information that could impact a subject’s livelihood, reputation and relationships.

In 2019, researchers at the Université catholique de Louvain in Belgium and Imperial College London reported two significant findings:

- 99.98% of Americans would be correctly re-identified in any dataset using 15 demographic attributes such as age, gender and marital status.

- Even heavily sampled anonymized datasets are unlikely to satisfy the standards for anonymization under the GDPR, a result that seriously challenges the technical and legal adequacy of the de-identification “release-and-forget model”.

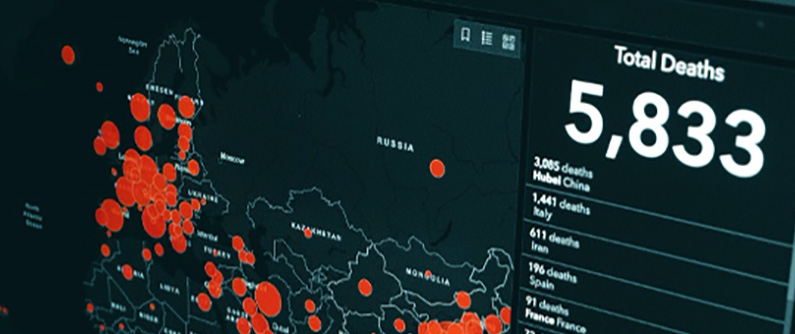

The starting point for any discussion about the risks of re-identification is de-identification, or anonymization, of data. Well-intentioned organizations that want to share data with business partners or create open, public data sets (for example, for COVID-19 research purposes) may set themselves the goal of changing their source data to the extent that it can no longer be linked back to individuals. When an organization believes it has achieved this goal, it may then feel confident that it can “release and forget” the data.

But de-identification is widely regarded as a fallible process, which means it is not only possible to piece the data back together and identify the individual, it is scarily simple to do so, as the European researchers found. Their statistical model indicates that currently popular methods of anonymizing data, such as releasing samples (subsets) of the information, fail to adequately protect complex data sets of personal information. They say the more attributes available in a data set, the more likely a match between data and individual is to be correct, and thus the less likely it is that the data can be considered “anonymized”. They therefore conclude that de-identification is generally ineffective and cannot be considered an adequate data protection control, at least by itself. Additional security controls should be put on “de-identified” data sets, such as limiting which people and systems have access to the data.
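To make the link between attribute count and re-identification risk concrete, here is a minimal Python sketch. It is not the researchers' statistical model. It simply counts how many records in a randomly generated, entirely synthetic population are unique on a given combination of attributes. The attribute names, value ranges, population size and the helper functions `make_synthetic_population` and `unique_fraction` are all assumptions made for illustration.

```python
import random
from collections import Counter


def make_synthetic_population(n, seed=0):
    """Generate n fake records. Every value is random and purely illustrative."""
    rng = random.Random(seed)
    return [
        {
            "age": rng.randint(18, 90),
            "gender": rng.choice(["F", "M", "X"]),
            "marital_status": rng.choice(
                ["single", "married", "divorced", "widowed"]
            ),
            "zip3": rng.randint(0, 99),      # hypothetical coarse location code
            "children": rng.randint(0, 4),
        }
        for _ in range(n)
    ]


def unique_fraction(records, attributes):
    """Return the share of records whose attribute combination appears only once."""
    keys = [tuple(record[a] for a in attributes) for record in records]
    counts = Counter(keys)
    return sum(1 for key in keys if counts[key] == 1) / len(records)


population = make_synthetic_population(10_000)
attributes = ["age", "gender", "marital_status", "zip3", "children"]

# Measure uniqueness as attributes are added one at a time.
fractions = [
    unique_fraction(population, attributes[:k])
    for k in range(1, len(attributes) + 1)
]

for k, fraction in enumerate(fractions, start=1):
    print(f"{k} attribute(s): {fraction:.1%} of records are unique")
```

A record that is unique on a set of attributes stays unique when more attributes are added, so the unique share can only hold steady or grow. Each unique record is one that anyone who knows those attribute values could single out.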

Adding to the conundrum, the researchers argue that even heavily sampled anonymized data sets are unlikely to satisfy modern standards for anonymization under privacy legislation such as the GDPR and CCPA. Under the GDPR, data is truly anonymous only if the data set has been “stripped” of enough elements that an individual can no longer be identified, and these recent findings suggest that is rarely achievable for rich data sets.

One issue with traditional anonymization approaches is that they don’t scale to the sheer volume of data being collected about us these days. The researchers’ earlier work on re-identification in behavioral data sets and credit card information showed it’s very unlikely that a general anonymization method can exist for “high dimensional” data. The US President’s Council of Advisors on Science and Technology (PCAST) has agreed, stating that de-identification “remains somewhat useful as an added safeguard, but it is not robust against near‐term future re‐identification methods.”

What is the solution?

Data sets will always play a useful role in society, particularly in social, health and economic development. So how do we use data to foster innovation while better preserving personal privacy? Fortunately, there has already been some movement in this space, and de-identification is being phased out as a method of anonymizing data. In its place are what are called privacy-enhancing technologies (PETs). Deloitte defines a PET as: “a coherent system of ICT measures which protect privacy by reducing personal data or by preventing undesired personal data processing … without losing the functionality of the information system”. PETs “use various technical means to protect privacy by providing anonymity, pseudonymity, unlinkability, and unobservability of data subjects.” Homomorphic encryption and pseudonymized data are examples of PETs.
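As a rough illustration of pseudonymization, the Python sketch below replaces a direct identifier with a keyed hash (HMAC-SHA256) using only the standard library. This is one common way to pseudonymize, not a description of any particular product. The function name `pseudonymize`, the sample email address and the key handling are assumptions for the example. In practice the key would live in a secrets manager, not in code.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Map an identifier to a stable pseudonym using a keyed hash.

    The same identifier and key always give the same pseudonym, so records
    can still be joined, but the mapping cannot be recomputed without the key.
    """
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


# Hypothetical key for illustration only; never hard-code a real key.
key = b"example-key-kept-separately-from-the-data"

record = {"email": "jane@example.com", "age": 34}
shared_record = {
    "subject_id": pseudonymize(record["email"], key),
    "age": record["age"],
}
print(shared_record)
```

Note that a pseudonym only hides the direct identifier. The remaining attributes (here, `age`) can still contribute to re-identification, which is why pseudonymized data generally still needs access controls.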

Until these technologies are widespread, we recommend that businesses apply the 7 Principles of Privacy by Design to their data processing systems. This includes limiting the data they collect and process, and ensuring that they process data consistent with the social license granted by their customers. We’ve written previously about how we take a minimalist approach to analytics with our MySudo product, which is a model that we believe balances the privacy of our users and our need to operate a business.

As individuals, we should all acknowledge that government regulation and technology are not yet at a point where we can abdicate responsibility for controlling access to our personal information. Read more on how you can get started.

Photo by Clay Banks on Unsplash